MySQL and PostgreSQL on Docker: Complete DevOps Guide 2026

Published on Jan 6, 2026


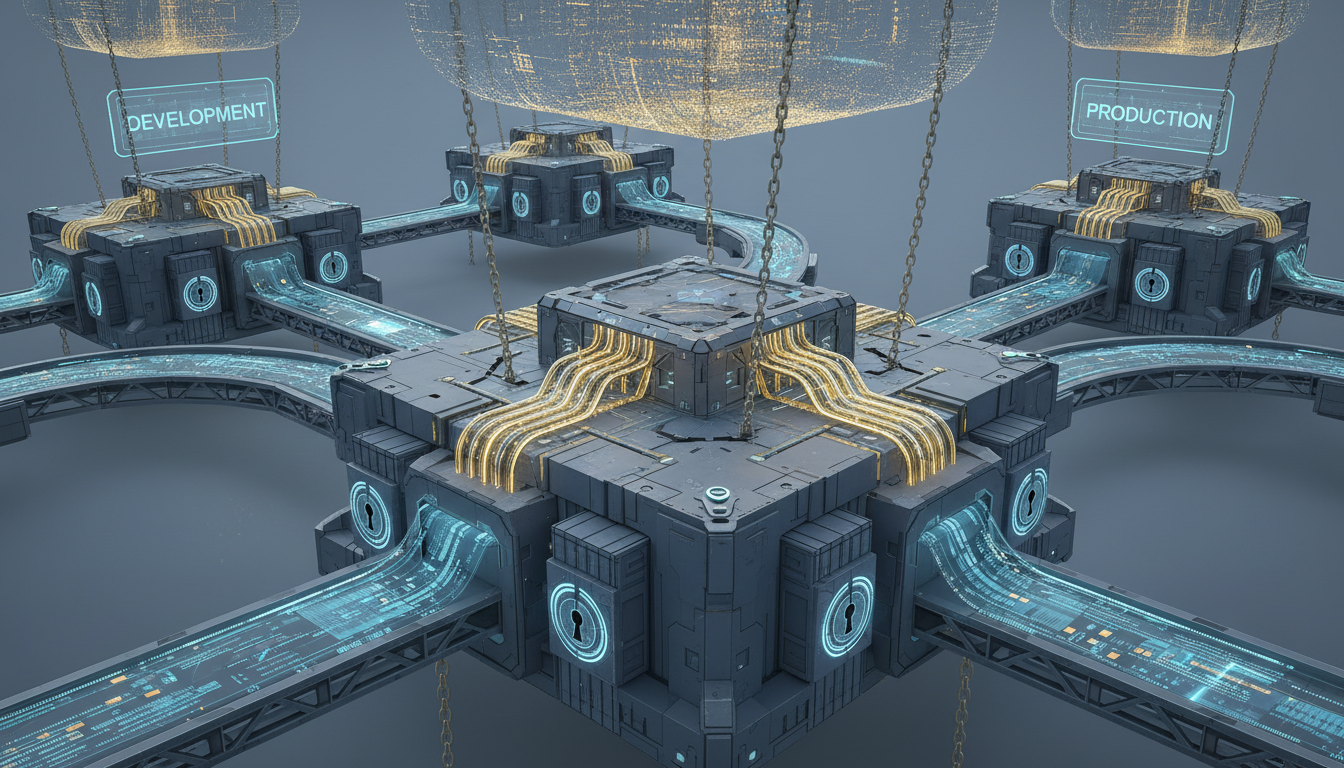

In the DevOps landscape of 2026, containerization remains a fundamental pillar. Running MySQL and PostgreSQL on Docker gives you the agility, reproducibility, and scalability that modern workflows demand. This practical guide explains how to configure, run, and manage these relational databases in Docker containers in a way that also fits automation platforms like Kuboide. You'll learn the essential configuration options, production best practices, and how to avoid common pitfalls, so that database management becomes a repeatable part of your DevOps cycle. Whether you're orchestrating microservices or keeping development, testing, and production environments consistent, running MySQL and PostgreSQL with Docker is a valuable skill for any IT professional.

Table of Contents

- Why Use Docker for MySQL and PostgreSQL in DevOps Environments?

- Basic MySQL Configuration in a Docker Container

- Basic PostgreSQL Configuration in a Docker Container

- Best Practices for Containerized Databases in Production

- Automation and Management with Kuboide

Key Takeaways

- Isolation and Reproducibility: Docker provides isolated, reproducible database environments across development, staging, and production.

- Simplified Management: Using official images and defining configuration through Docker simplifies MySQL and PostgreSQL installation and updates.

- Critical Persistence: Mounting persistent volumes is mandatory to preserve database data beyond the container lifecycle.

- Fundamental Security: Keeping credentials out of images and command lines (with env files or secrets) and limiting port exposure are non-negotiable security practices.

- DevOps Integration: Solutions like Kuboide automate orchestration, deployment, and monitoring of these containers, seamlessly integrating them into the DevOps workflow.

Why Use Docker for MySQL and PostgreSQL in DevOps Environments?

Docker is well suited to databases in DevOps because it provides isolation, portability, and fast provisioning. In the DevOps context of 2026, the ability to create identical, independent environments for development, testing, and production is crucial. Containerizing MySQL and PostgreSQL eliminates version and dependency conflicts between projects, and because the same image and configuration run everywhere, the database behaves consistently from the developer's laptop to the production cloud server.

The portability offered by Docker images is another decisive advantage. Once you define the image (using official MySQL and PostgreSQL images as a recommended starting point), you can run it without modifications on any host that supports Docker, whether it's AWS, Google Cloud, DigitalOcean, Hetzner, or a private server. This greatly simplifies migration between cloud providers or environment replication.

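The speed of container startup and teardown is fundamental for efficient CI/CD workflows. Creating a temporary database instance to run integration tests or to back a pull-request preview takes only seconds. Similarly, a minor-version update of MySQL or PostgreSQL often comes down to changing the image tag in your Dockerfile or docker-compose.yml and recreating the container, a much more streamlined process than a traditional update on bare metal or a VM. Major-version upgrades need more care: PostgreSQL cannot start on a data directory created by an older major version (for example, when moving from 15 to 16), so the data must be migrated with pg_dump/pg_restore or pg_upgrade, and MySQL major upgrades should be rehearsed against a backup first.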
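Because `docker run -d` returns as soon as the container starts, a CI job that spins up a throwaway database usually has to wait until the server actually accepts connections. The sketch below is a small, generic retry helper for that step; the name `wait_for` and its argument order are hypothetical, not part of Docker.

```bash
# wait_for ATTEMPTS DELAY COMMAND [ARGS...]
# Hypothetical helper: runs COMMAND until it exits with status 0, trying at
# most ATTEMPTS times and sleeping DELAY seconds between failed attempts.
# Returns 0 on success and 1 if every attempt failed.
wait_for() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    # Sleep only if another attempt remains.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}
```

In a CI job you might start a throwaway instance with `docker run -d --rm --name ci-postgres -e POSTGRES_PASSWORD=ci_only_password postgres:15` (container name and password are placeholders), call `wait_for 30 1 docker exec ci-postgres pg_isready -h 127.0.0.1 -U postgres`, run the test suite, and finish with `docker stop ci-postgres`, after which `--rm` deletes the container. Probing over TCP with `-h 127.0.0.1` rather than the default Unix socket avoids a false positive during first-time initialization, when the image's entrypoint briefly runs a temporary server that does not listen on TCP.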

Finally, Docker integrates perfectly with DevOps orchestration and automation tools like Kuboide. Kuboide, with its Docker-native architecture, can automatically manage the database container lifecycle (start, stop, update), allocate resources (CPU, RAM) granularly, monitor consumption, and display logs in real-time, centralizing and simplifying otherwise complex operations. This alignment between containerization and automation represents the heart of modern DevOps efficiency.

Basic MySQL Configuration in a Docker Container

To run MySQL in a Docker container, use the official image from Docker Hub. The fundamental docker run command combines image definition, data persistence, and initial configuration. Here's a practical example:

```bash
docker run -d --name mysql-container \
  -e MYSQL_ROOT_PASSWORD=my_secure_password \
  -e MYSQL_DATABASE=my_database \
  -e MYSQL_USER=my_user \
  -e MYSQL_PASSWORD=user_password \
  -v mysql_data:/var/lib/mysql \
  -p 3306:3306 \
  mysql:8.0
```

Let's analyze the key options:

- `-d`: Runs the container in detached mode (in the background).
- `--name mysql-container`: Assigns an identifying name to the container.
- `-e MYSQL_ROOT_PASSWORD=...`: Sets the root user's password. The container refuses to initialize unless you set this variable or one of its alternatives (`MYSQL_ALLOW_EMPTY_PASSWORD` or `MYSQL_RANDOM_ROOT_PASSWORD`). Choose a strong password.
- `-e MYSQL_DATABASE=...`: Creates a database with the specified name when the container initializes.
- `-e MYSQL_USER=...` and `-e MYSQL_PASSWORD=...`: Create a non-root user with full privileges on the database named in `MYSQL_DATABASE`. Applications can then connect without using root, which improves security.
- `-v mysql_data:/var/lib/mysql`: Creates a named Docker volume called `mysql_data` (if it doesn't exist yet) and mounts it at `/var/lib/mysql` inside the container, where MySQL stores its data files. This is VITAL to avoid data loss when the container is removed or recreated.
- `-p 3306:3306`: Publishes the container's port 3306 (MySQL's default port) on port 3306 of the Docker host, allowing external applications to connect. For greater security, consider keeping the database reachable only on internal Docker networks.
- `mysql:8.0`: Specifies the official MySQL image and version (8.0 in this case). The `mysql:latest` tag always points to the newest release; pin a specific version for production stability.

Note that the initialization variables (the root password, `MYSQL_DATABASE`, `MYSQL_USER`, and `MYSQL_PASSWORD`) are applied only on first startup, when the data directory is empty. Changing them later on an existing volume has no effect.

Configuration Customization

To apply custom configurations (my.cnf), you can mount a custom configuration file or an entire directory to the appropriate path inside the container:

```bash
docker run -d --name mysql-container \
  -v mysql_data:/var/lib/mysql \
  -v /host/path/my.cnf:/etc/mysql/conf.d/custom.cnf \
  -e MYSQL_ROOT_PASSWORD=my_secure_password \
  mysql:8.0
```

This mounts the `my.cnf` file from your host machine as `custom.cnf` in the container's `/etc/mysql/conf.d/` directory, which MySQL reads automatically at startup. This is useful for tuning parameters like `innodb_buffer_pool_size` or enabling specific logs.
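As an illustration, the sketch below writes a small override file that could be mounted this way. The `mysql-conf/` directory and every value in the file are assumptions chosen for the example, not tuning recommendations; in particular, `innodb_buffer_pool_size` has to fit inside whatever memory limit you give the container.

```bash
# Write a minimal MySQL override file (illustrative values only).
mkdir -p mysql-conf
cat > mysql-conf/custom.cnf <<'EOF'
[mysqld]
# Keep the InnoDB buffer pool well inside the container's memory limit.
innodb_buffer_pool_size = 1G
# Log statements slower than 2 seconds to the slow query log.
slow_query_log = ON
long_query_time = 2
slow_query_log_file = /var/lib/mysql/slow.log
EOF
```

You would then mount it read-only with `-v "$PWD/mysql-conf/custom.cnf:/etc/mysql/conf.d/custom.cnf:ro"`. Keep the file from being world-writable: MySQL ignores option files with world-writable permissions.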

Basic PostgreSQL Configuration in a Docker Container

Running PostgreSQL in Docker follows similar principles to MySQL, using the official image. Here's the equivalent command:

```bash
docker run -d --name postgres-container \
  -e POSTGRES_USER=my_user \
  -e POSTGRES_PASSWORD=my_secure_password \
  -e POSTGRES_DB=my_database \
  -v postgres_data:/var/lib/postgresql/data \
  -p 5432:5432 \
  postgres:15
```

Option details:

- `-d`, `--name`: Same meaning as in the MySQL example (background execution, container name).
- `-e POSTGRES_PASSWORD=...`: Sets the password of the superuser (the user named in `POSTGRES_USER`, or `postgres` if that is unset). This variable is mandatory for initializing a new database; the image only skips it if you explicitly disable password authentication, which is not recommended. Use a strong password.
- `-e POSTGRES_USER=...`: Sets the name of the superuser created at initialization, replacing the default `postgres`. If omitted, the superuser remains `postgres`.
- `-e POSTGRES_DB=...`: Creates a database with the specified name at initialization, owned by the `POSTGRES_USER` user. If omitted, the database name defaults to the value of `POSTGRES_USER`.
- `-v postgres_data:/var/lib/postgresql/data`: Creates a named Docker volume called `postgres_data` and mounts it at `/var/lib/postgresql/data` inside the container, where PostgreSQL stores its data and, by default, its configuration files. This is the MOST IMPORTANT setting for avoiding data loss.
- `-p 5432:5432`: Publishes the container's port 5432 (PostgreSQL's default port) on port 5432 of the Docker host. As with MySQL, in secure environments consider exposing it only on internal Docker networks.
- `postgres:15`: Specifies the official PostgreSQL image and major version (15 in this case). `postgres:latest` tracks the newest release; pin a specific version for production.

As with MySQL, these initialization variables take effect only on first startup with an empty data directory; on an existing volume they are ignored.

Configuration Customization (postgresql.conf)

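To customize PostgreSQL configuration, you can mount a custom postgresql.conf file, or pass individual settings to the server as `-c name=value` arguments after the image name (for example, `postgres:15 -c shared_buffers=256MB`). The most direct method for a complete configuration is mounting a file: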

```bash
docker run -d --name postgres-container \
  -v postgres_data:/var/lib/postgresql/data \
  -v /host/path/postgresql.conf:/etc/postgresql/postgresql.conf \
  -e POSTGRES_PASSWORD=my_secure_password \
  postgres:15 -c 'config_file=/etc/postgresql/postgresql.conf'
```

This command:

- Mounts the persistent volume `postgres_data` for data.
- Mounts the custom configuration file `postgresql.conf` from your host machine at `/etc/postgresql/postgresql.conf` inside the container.
- Passes the `-c 'config_file=/etc/postgresql/postgresql.conf'` option to the PostgreSQL server process inside the container, telling it to use your mounted configuration file instead of the default one. This is essential for tuning parameters like `shared_buffers`, `work_mem`, or `max_connections`.
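A minimal `postgresql.conf` to pair with this command might look like the sketch below; the `pg-conf/` directory and the numeric values are illustrative assumptions. One line matters more than the tuning values: the image's built-in configuration sets `listen_addresses = '*'`, and a file you supply replaces it entirely, so without that line the server listens only on localhost inside the container and other containers cannot connect.

```bash
# Write a minimal PostgreSQL configuration file (illustrative values only).
mkdir -p pg-conf
cat > pg-conf/postgresql.conf <<'EOF'
# Accept TCP connections on all container interfaces; PostgreSQL's own
# default is 'localhost', which other containers cannot reach.
listen_addresses = '*'
# Size memory settings against the container's memory limit.
shared_buffers = 512MB
work_mem = 16MB
max_connections = 100
EOF
```

Mount it with `-v "$PWD/pg-conf/postgresql.conf:/etc/postgresql/postgresql.conf:ro"` together with the `-c 'config_file=/etc/postgresql/postgresql.conf'` argument from the command above.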

Best Practices for Containerized Databases in Production

Containerizing databases for production requires attention to persistence, security, resources, and resilience. Neglecting these aspects can lead to data loss, vulnerabilities, or downtime. Here are the fundamental best practices:

Absolute Persistence with Volumes: Never store database data in the container's writable layer, which is lost when the container is removed. ALWAYS use Docker volumes (`docker volume create`) or bind mounts to persistent host directories (`-v /host/path:/container/path`) on the critical paths:

- MySQL: `/var/lib/mysql`
- PostgreSQL: `/var/lib/postgresql/data`

These volumes must be included in your regular backups: Docker does not back up volumes automatically.
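Since Docker leaves backups to you, one common approach is a logical dump taken through the running container; copying a live volume's files directly can capture an inconsistent state. The sketch below assumes the PostgreSQL container from earlier and the image's default local authentication. The function names, the `.dump` naming scheme, and the retention logic are illustrative assumptions, not a standard tool.

```bash
# Hypothetical helpers: names, arguments and file naming are illustrative.

# Dump one database from a running PostgreSQL container to a timestamped
# custom-format archive on the host (restore it later with pg_restore).
backup_postgres() {
  local container="$1" db_user="$2" db_name="$3" backup_dir="$4" out
  mkdir -p "$backup_dir" || return 1
  out="$backup_dir/$db_name-$(date +%Y%m%d-%H%M%S).dump"
  # pg_dump runs inside the container and streams to stdout; the
  # redirection writes the archive on the host.
  if ! docker exec "$container" pg_dump -U "$db_user" -Fc "$db_name" > "$out"; then
    rm -f "$out"   # don't leave a truncated archive behind
    return 1
  fi
}

# Keep only the newest KEEP archives for one database. The timestamp
# format sorts lexically, so reverse name order is newest first.
prune_backups() {
  local backup_dir="$1" db_name="$2" keep="$3"
  ls -1 "$backup_dir/$db_name"-*.dump 2>/dev/null \
    | sort -r \
    | tail -n +"$((keep + 1))" \
    | while IFS= read -r f; do rm -f "$f"; done
}
```

For example, a nightly job could run `backup_postgres postgres-container my_user my_database /srv/backups && prune_backups /srv/backups my_database 7` (the `/srv/backups` path is hypothetical). `docker exec` is deliberately used without `-t`, since a pseudo-TTY can corrupt binary output.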

Secure Credential Management: Never put passwords or other secrets in plain text in a `Dockerfile` or in `docker run` commands. Instead, use:

- Docker Secrets: Particularly effective in Docker Swarm for securely providing credentials to services.
- Environment Variables from Files: Use `--env-file` to load credentials from an external file (excluded from version control!) instead of `-e PASSWORD=...` on the command line. Example: `docker run --env-file db_secrets.env ...`.
- Integrated Secret Managers: If you use an orchestrator like Kubernetes or a platform like Kuboide, leverage their built-in secret management.
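A minimal sketch of preparing such a file: it writes `db_secrets.env` (the name from the example above) with a randomly generated password, creates it with owner-only permissions, and adds it to `.gitignore`. It writes the file only if it does not exist yet, so existing credentials are never overwritten. Docker reads env files literally (one `KEY=value` per line, with no quoting or shell expansion), which is why the generated password is plain hex.

```bash
env_file="db_secrets.env"

# 24 random bytes rendered as 48 lowercase hex characters.
gen_secret() {
  od -An -N24 -tx1 /dev/urandom | tr -d ' \n'
}

if [ ! -e "$env_file" ]; then
  # umask 077 in a subshell: the file is created readable by its owner only.
  (
    umask 077
    cat > "$env_file" <<EOF
POSTGRES_USER=my_user
POSTGRES_PASSWORD=$(gen_secret)
POSTGRES_DB=my_database
EOF
  )
fi

# Keep the file out of version control.
grep -qxF "$env_file" .gitignore 2>/dev/null || echo "$env_file" >> .gitignore
```

Start the container with `docker run -d --name postgres-container --env-file db_secrets.env -v postgres_data:/var/lib/postgresql/data postgres:15`. Variables passed this way are still visible through `docker inspect`; for stronger protection, the official MySQL and PostgreSQL images also accept `_FILE` variants (such as `POSTGRES_PASSWORD_FILE`) that read the value from a mounted file, which is how Docker Secrets are usually consumed.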

Network Exposure Limitation: Don't expose the database port (3306/5432) publicly unless strictly necessary. Better strategies:

- User-Defined Docker Networks: Create a dedicated Docker network (`docker network create my-db-net`) and connect only the database container and the application containers that need to access it. Don't publish the port on the host (`-p`); containers on the same user-defined network reach the database by its container name.
- Host/Cloud Firewall: If you must expose the port, rigorously configure the firewall (e.g., Security Groups on AWS, firewall rules on GCP, UFW/iptables on the host) to allow connections only from specific, trusted IP addresses or networks. Be aware that Docker writes its own iptables rules for published ports, so UFW rules alone do not block them; binding the port to a specific address (e.g., `-p 127.0.0.1:5432:5432`) limits exposure at the source.
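The same isolation can also be declared in a Compose file. In the sketch below (written out via a shell heredoc), the database service joins only an internal `backend` network and publishes no ports, while a hypothetical application service (`my-app:1.0`, with illustrative `DB_HOST`/`DB_PORT` variables) sits on both networks and is the only service exposed on the host. Services on a shared network reach each other by service name through Docker's embedded DNS, so the application connects to host `db` on port 5432.

```bash
# Write a hypothetical docker-compose.yml; the app service, its image and
# its variable names are illustrative placeholders.
cat > docker-compose.yml <<'EOF'
services:
  db:
    image: postgres:15
    env_file: db_secrets.env
    volumes:
      - postgres_data:/var/lib/postgresql/data
    networks:
      - backend
    # Deliberately no port mapping: only services on "backend" can reach it.
  app:
    image: my-app:1.0        # hypothetical application image
    environment:
      DB_HOST: db            # service name resolved by Docker's embedded DNS
      DB_PORT: "5432"
    ports:
      - "8080:8080"
    networks:
      - backend
      - frontend
    depends_on:
      - db

networks:
  backend:
    internal: true           # containers here get no external connectivity
  frontend: {}

volumes:
  postgres_data:
EOF
```

`docker compose config` validates the file and prints the resolved configuration (it expects the `db_secrets.env` file to exist) before you start the stack with `docker compose up -d`.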

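Resource Control (CPU/RAM): Databases are resource-intensive. Limit container resources to prevent a hungry database from destabilizing the entire host:

- `--cpus`: Limits how much CPU time the container can use, expressed as a number of CPUs (e.g., `--cpus 2.0`).
- `--memory`: Sets a hard RAM limit (e.g., `--memory 4g`). Always set a reasonable limit based on expected load, and keep the database's own memory settings (`innodb_buffer_pool_size`, `shared_buffers`) comfortably below it, or the kernel's out-of-memory killer may terminate the database process.
- `--memory-reservation`: Sets a soft limit that Docker tries to enforce when the host runs low on memory.
- Platforms like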